# ORB SLAM Versions

- Campos, C., Elvira, R., Rodríguez, J. J. G., Montiel, J. M. M., & Tardós, J. D. (2020). ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual-Inertial and Multi-Map SLAM. http://arxiv.org/abs/2007.11898

- https://github.com/UZ-SLAMLab/ORB_SLAM3

- Mur-Artal, R., & Tardós, J. D. (2017). ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Transactions on Robotics. https://doi.org/10.1109/TRO.2017.2705103

- https://github.com/UZ-SLAMLab/ORB_SLAM2

- Mur-Artal, R., Montiel, J. M. M., & Tardós, J. D. (2015). ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Transactions on Robotics, 31(5), 1147–1163. https://doi.org/10.1109/TRO.2015.2463671

- https://github.com/UZ-SLAMLab/ORB_SLAM

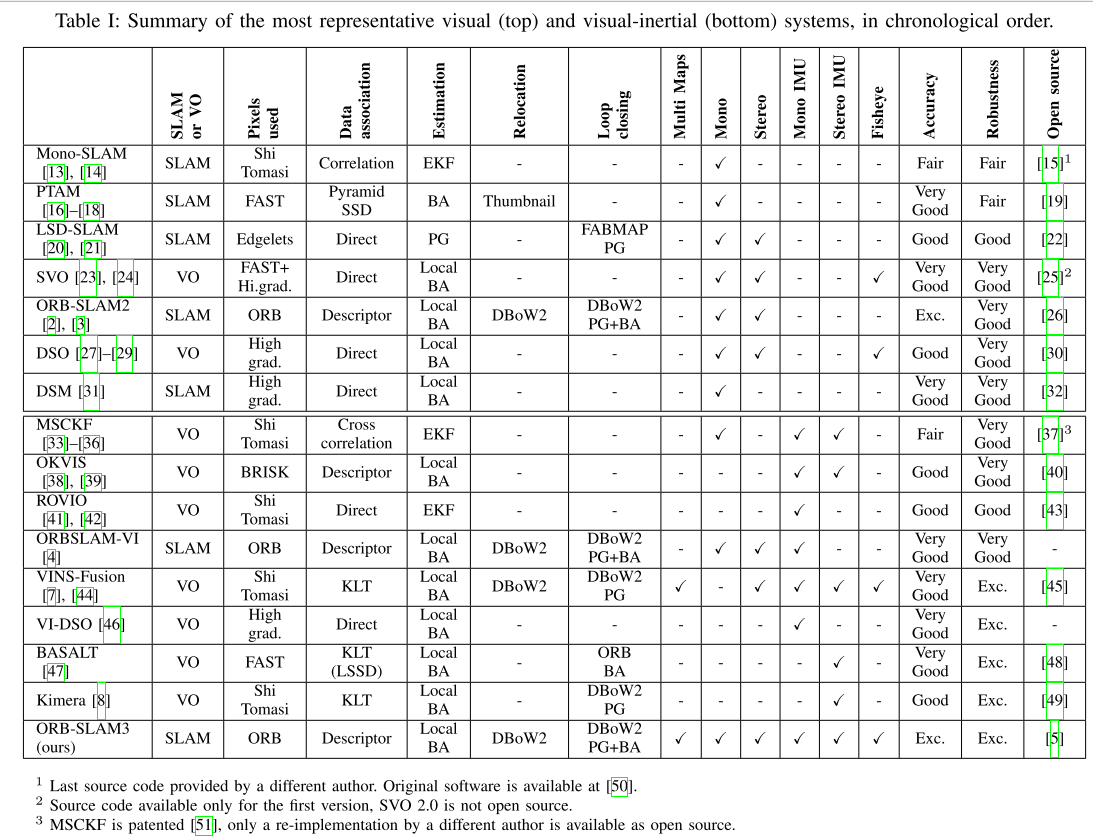

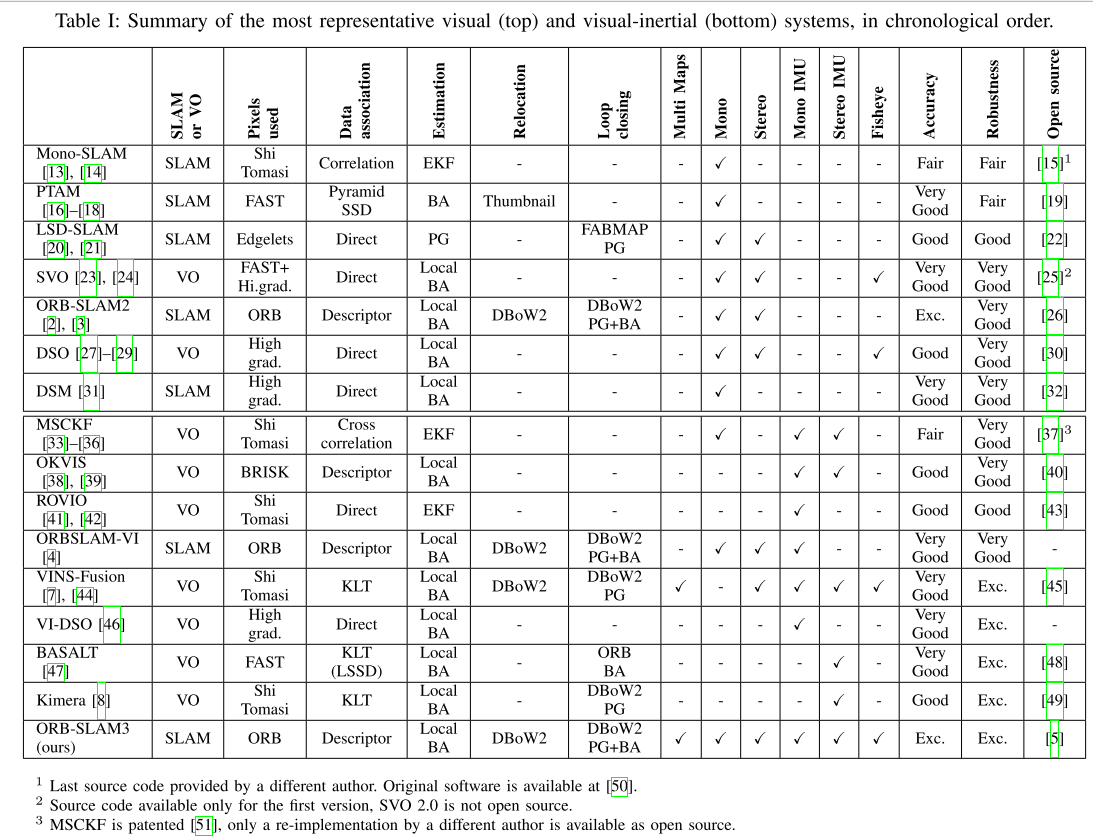

# ORB SLAM3

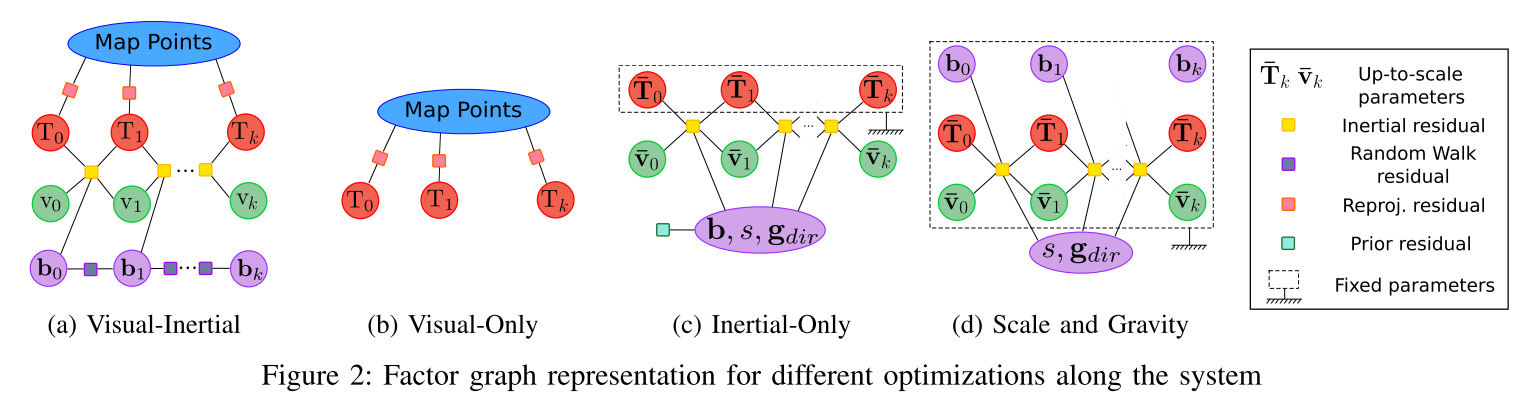

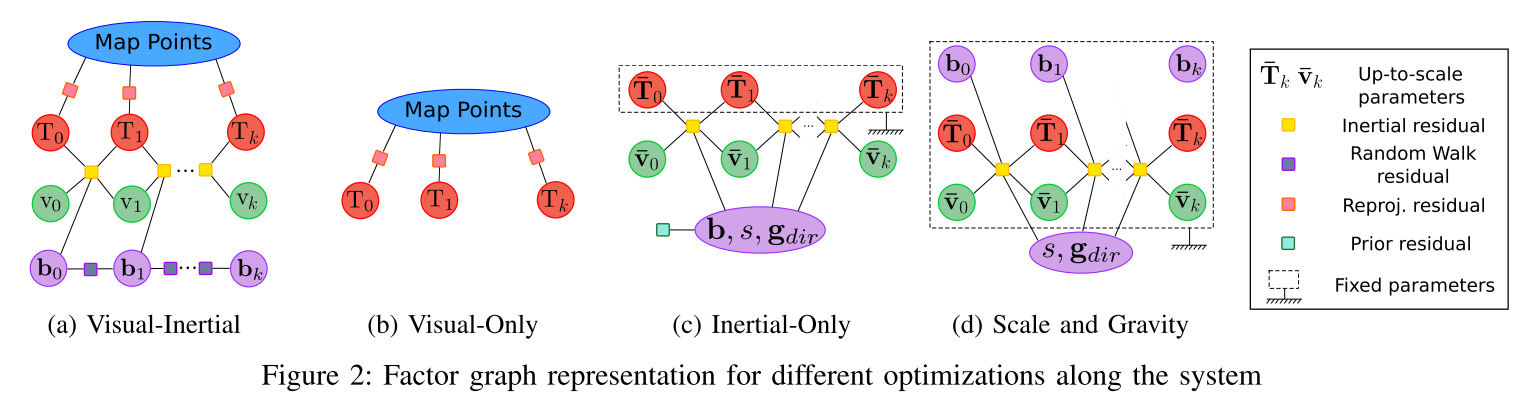

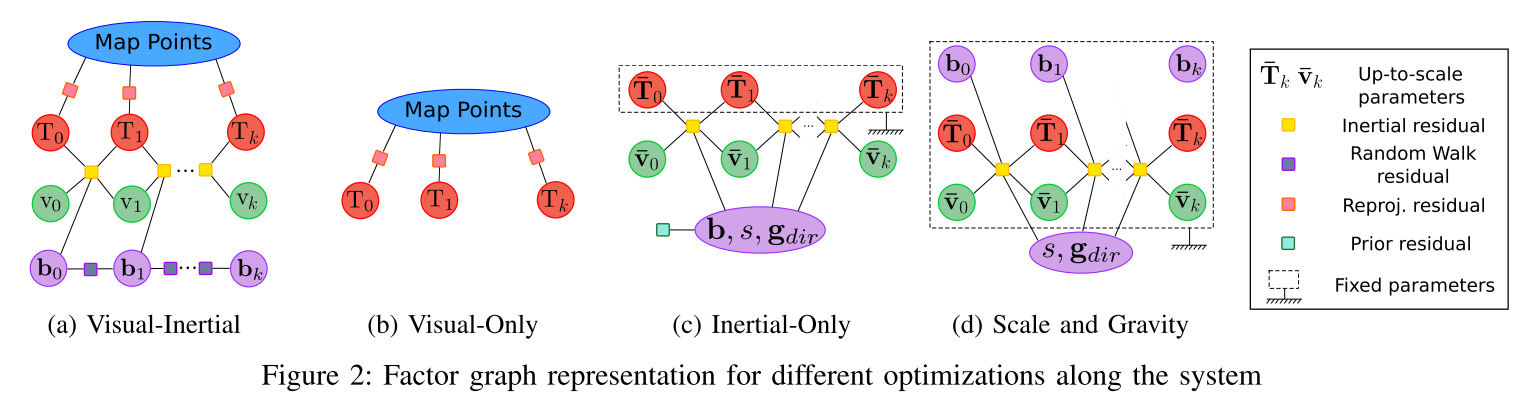

The first main novelty is a feature-based tightly-integrated visual-inertial SLAM system that fully relies on Maximum-a-Posteriori (MAP) estimation, even during the IMU initialization phase. The result is a system that operates robustly in real time, in small and large, indoor and outdoor environments, and is 2 to 5 times more accurate than previous approaches.

The second main novelty is a multiple-map system that relies on a new place recognition method with improved recall. Thanks to it, ORB-SLAM3 is able to survive long periods of poor visual information: when it gets lost, it starts a new map that will be seamlessly merged with previous maps when revisiting mapped areas. Compared with visual odometry systems that only use information from the last few seconds, ORB-SLAM3 is the first system able to reuse all previous information in all the algorithm stages. This makes it possible to include in bundle adjustment co-visible keyframes that provide high-parallax observations and boost accuracy, even if they are widely separated in time or come from a previous mapping session.

# ORB SLAM and ORB SLAM2

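There are many analyses of these papers online. This section quotes and collects some of the more accessible, high-level explanations.

Understanding the papers: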

---

Quoted from [CSDN - ORB-SLAM2 Paper Interpretation and Summary](https://blog.csdn.net/zxcqlf/article/details/80198298):

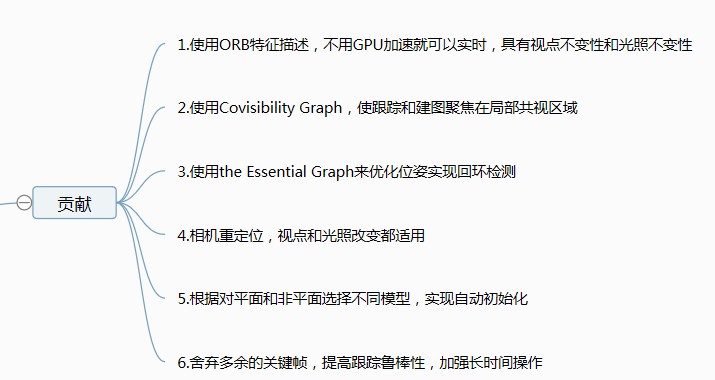

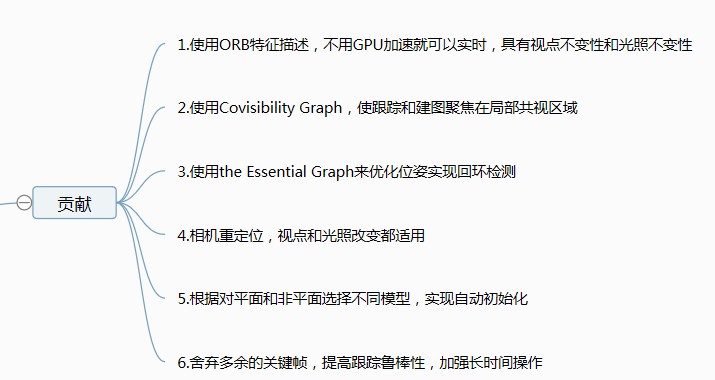

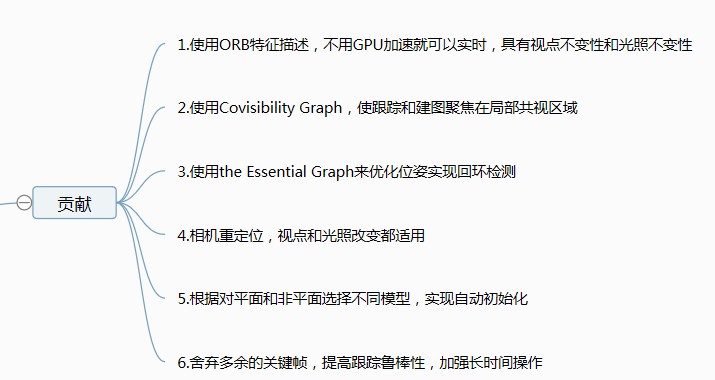

ORB-SLAM2 contributions

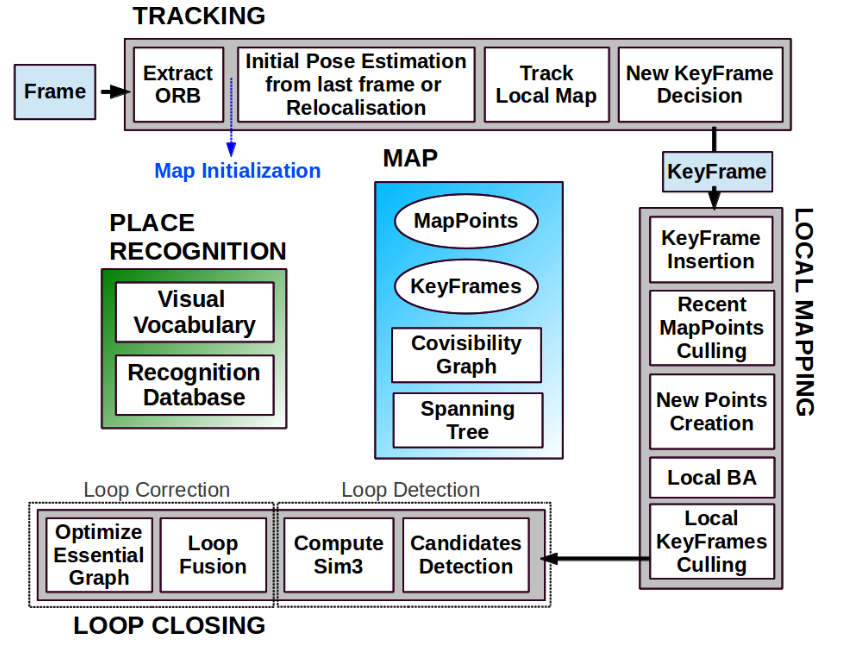

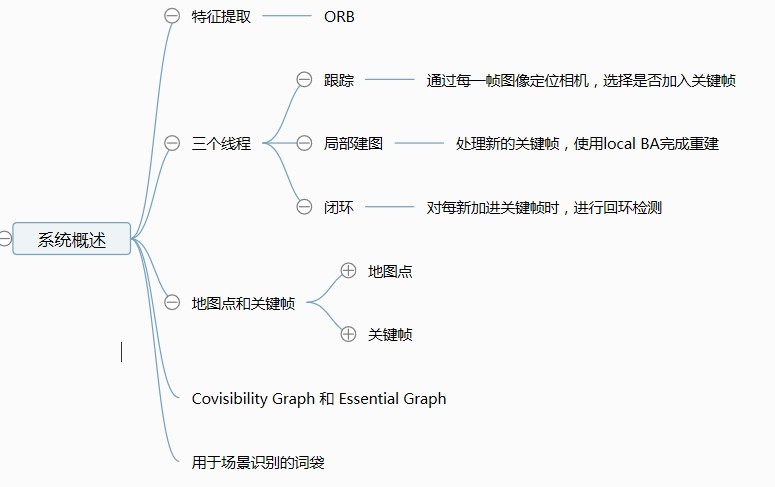

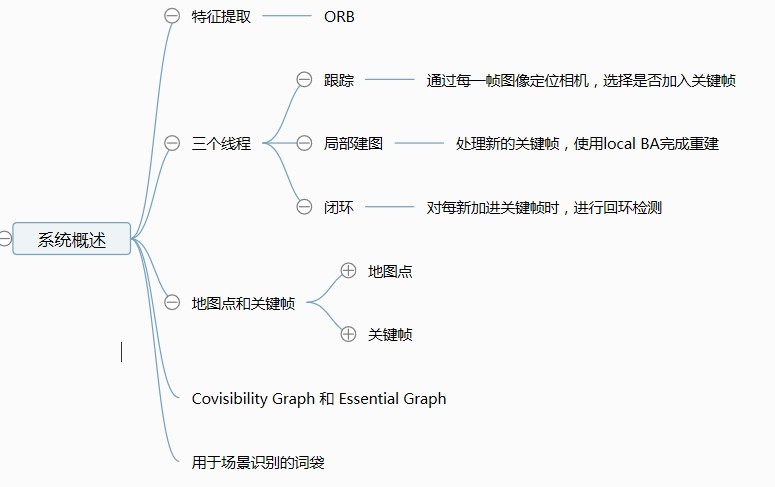

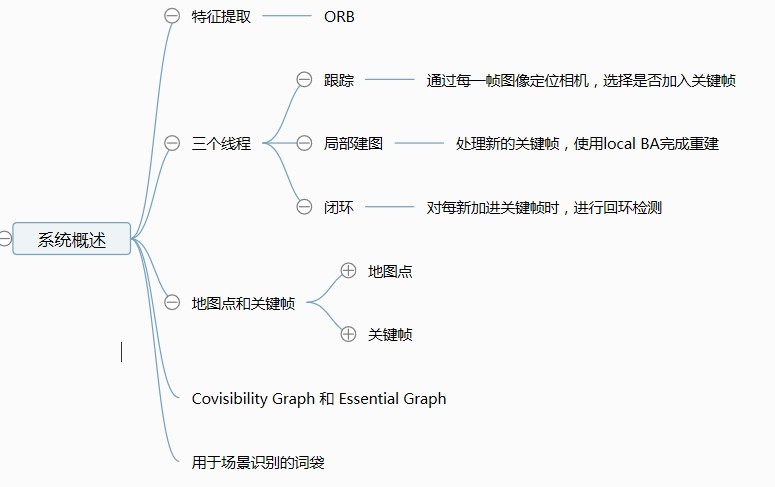

System architecture:

---

Quoted from [ORB-SLAM2 Paper & Code Study: Overview](https://www.cnblogs.com/MingruiYu/p/12347171.html):

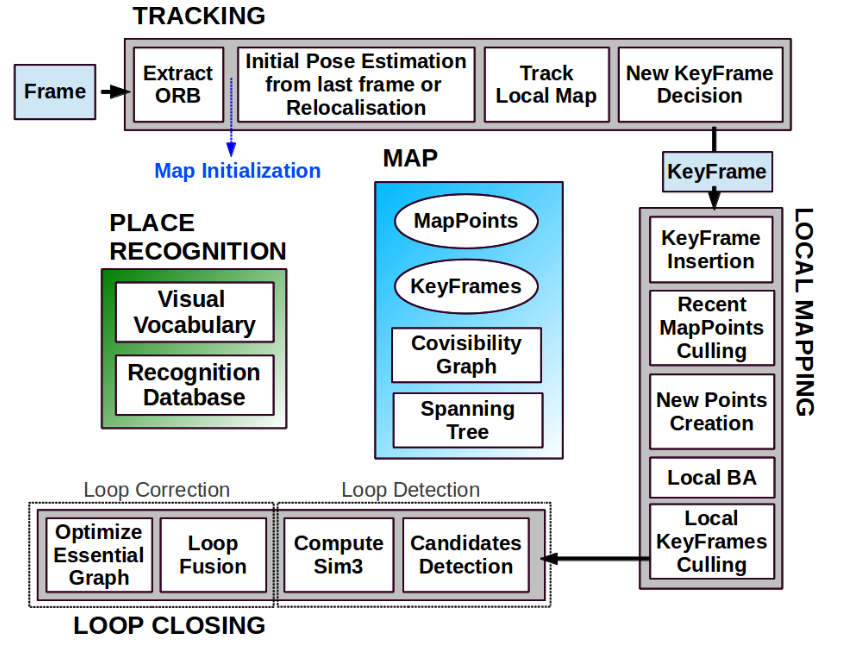

The ORB-SLAM system runs three threads concurrently:

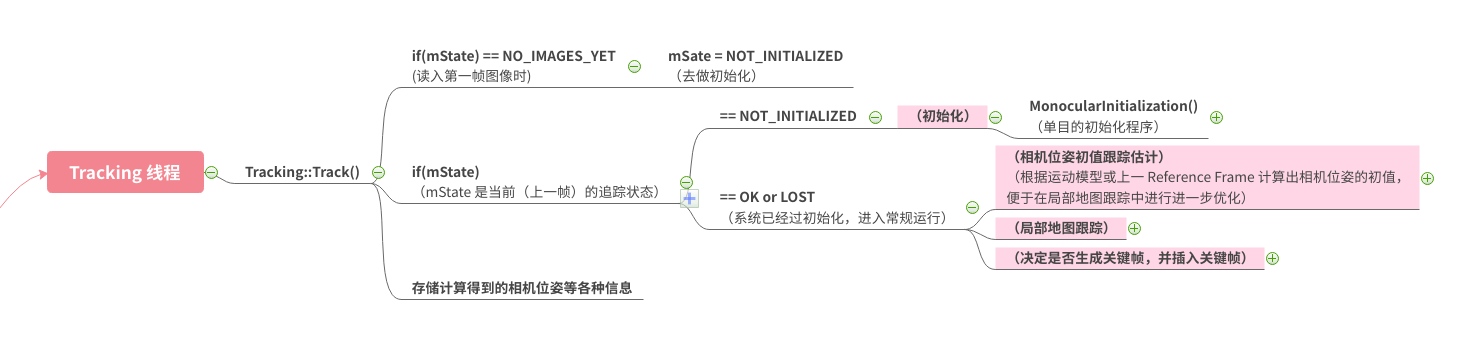

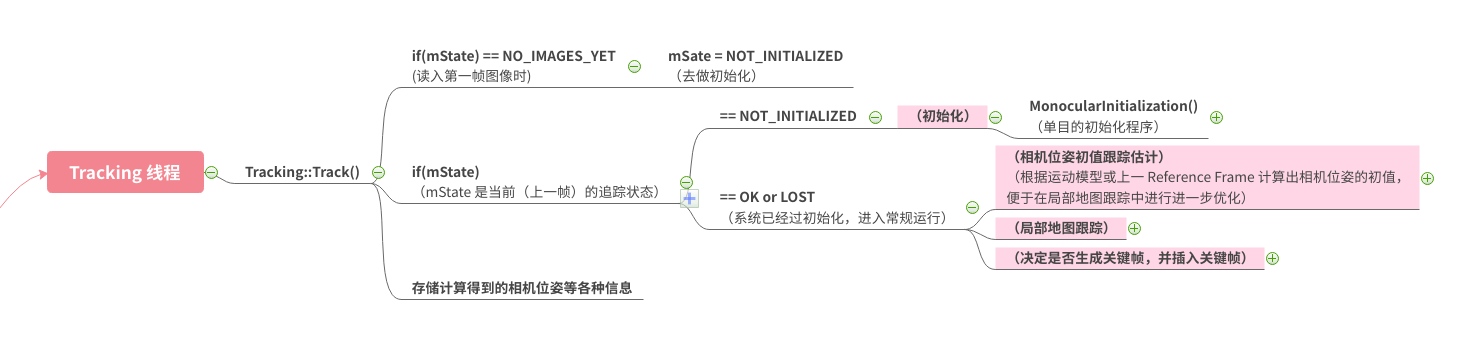

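Tracking thread:

- Extract ORB features from each newly read frame

- (System initialization)

- Estimate an initial pose for the current frame (from the previous frame plus motion-only BA, or by relocalization)

- Track the local map

  - Refine the initial pose from the previous step with a further BA optimization

  - Local map: the nearby KFs in the Covisibility Graph together with their MapPoints

- Decide whether to insert the current frame into the Map as a keyframe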

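LocalMapping thread:

- Receive the KFs inserted by the Tracking thread and preprocess them

- Cull low-quality MapPoints

- Create new MapPoints by triangulation

  - ORB features of the current KF that are not yet matched to existing MapPoints are matched against the features of its covisible KFs and then triangulated

- Local BA

- Cull redundant local keyframes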

LoopClosing thread:

- Receive the filtered, processed KFs passed on by LocalMapping

- Detect a set of candidate KFs

- Compute Sim3 and determine the final loop KF

- Perform loop fusion

- Optimize the Essential Graph

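The Essential Graph is defined as follows. The system builds a spanning tree: when a new KF is inserted, it is linked to the KF that shares the largest number of observed MapPoints with it. Essential Graph = this spanning tree + the edges of the Covisibility Graph whose weight is greater than 100.

The Covisibility Graph was introduced to describe the covisibility relations between KFs. It is fairly dense, however, which makes it awkward for global optimization, so the Essential Graph was proposed. As the figure above shows, the Covisibility Graph has many edges, the spanning tree is essentially a single line, and the Essential Graph lies between the two.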
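As a rough illustration of the definition above, the following Python sketch builds the spanning tree and the Essential Graph from a table of covisibility weights. The function name `build_essential_graph`, the dictionary layout, and the example weights are assumptions made for illustration, not ORB-SLAM2 code; the loop-closure edges that the ORB-SLAM paper also adds to the Essential Graph are left out.

```python
def weight(covis, a, b):
    """Number of MapPoints observed by both keyframes (0 if they share none)."""
    return covis.get(frozenset((a, b)), 0)


def build_essential_graph(keyframes, covis, min_weight=100):
    """Return (spanning_tree_edges, essential_graph_edges).

    keyframes: keyframe ids in insertion order (hypothetical layout).
    covis:     {frozenset({a, b}): shared MapPoint count}, i.e. the
               Covisibility Graph as an edge-weight table.
    """
    tree = set()
    for i, kf in enumerate(keyframes[1:], start=1):
        # Link the new KF to the earlier KF sharing the most MapPoints with it.
        parent = max(keyframes[:i], key=lambda other: weight(covis, kf, other))
        tree.add(frozenset((kf, parent)))
    # Keep only the strong covisibility edges.
    strong = {edge for edge, w in covis.items() if w > min_weight}
    return tree, tree | strong


# Illustrative weights only.
covis = {
    frozenset((0, 1)): 150,
    frozenset((0, 2)): 110,
    frozenset((1, 2)): 120,
    frozenset((0, 3)): 20,
    frozenset((1, 3)): 30,
    frozenset((2, 3)): 60,
}
tree, essential = build_essential_graph([0, 1, 2, 3], covis)
print(len(tree), len(essential), len(covis))  # 3 4 6
```

In this toy example the spanning tree has 3 edges, the Essential Graph 4, and the Covisibility Graph 6, which matches the ordering described above.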

The author provides a code map: [Program map](https://www.edrawsoft.cn/viewer/public/s/7cfe1019218402)

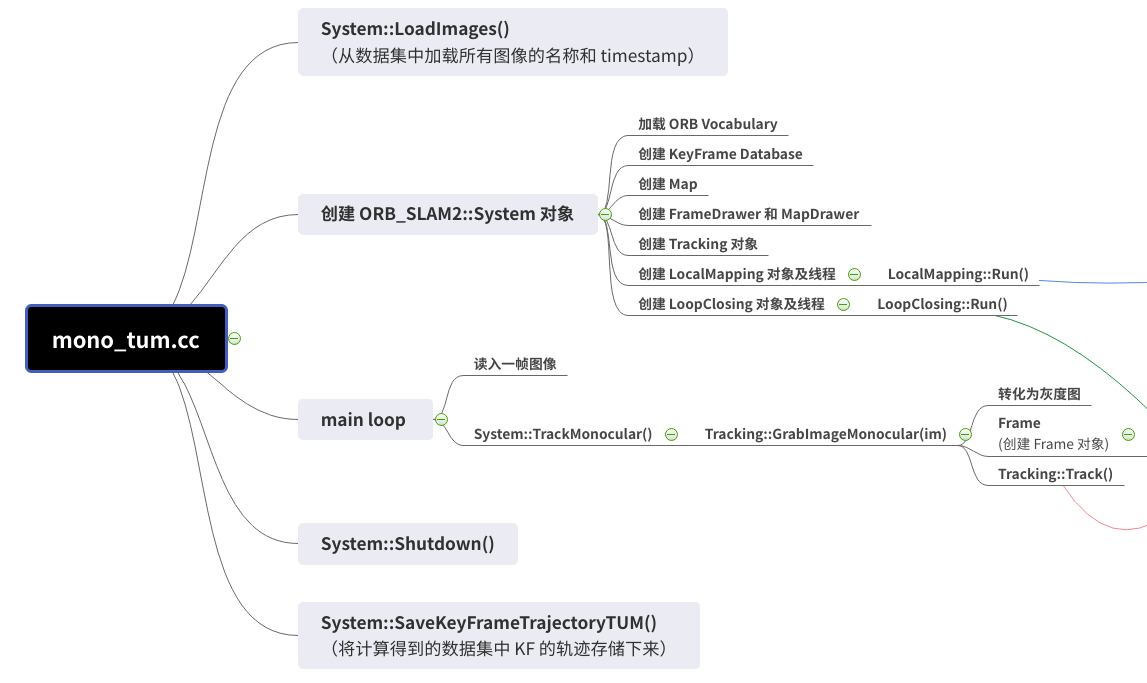

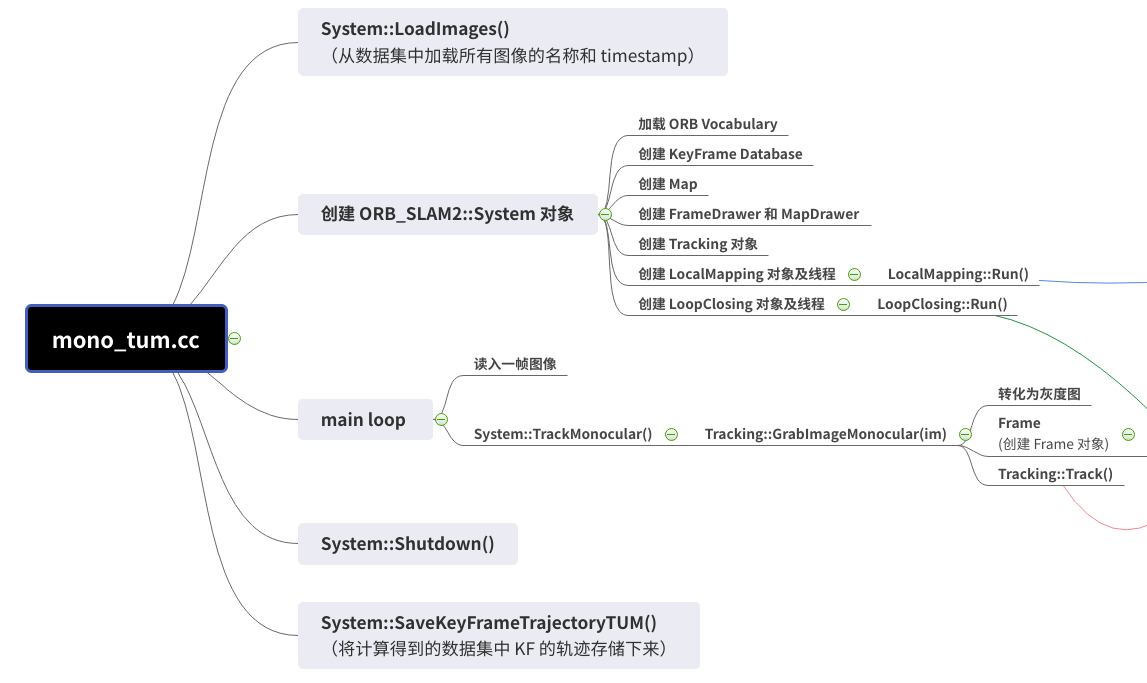

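ORB-SLAM2 uses System.cc as the entry point of the system. It creates the various objects and also creates and runs the three threads: Tracking, LocalMapping, and LoopClosing. System::TrackMonocular() is the entry point that starts the Tracking thread. Tracking runs in the main thread, while the LocalMapping and LoopClosing threads are created with new thread.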
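The thread layout described above can be sketched with Python's standard `threading` and `queue` modules. This is only a structural analogy under stated assumptions: the names `run_system`, `local_mapping`, `loop_closing`, and the `is_keyframe` callback are hypothetical, and the real system is C++ with far richer shared state. Tracking runs in the caller's (main) thread, while the two other stages run as worker threads fed by queues.

```python
import queue
import threading

STOP = object()  # sentinel telling a worker thread to shut down


def local_mapping(kf_in, kf_out, log):
    """Worker: consume keyframes from Tracking, then hand them to LoopClosing."""
    while True:
        kf = kf_in.get()
        if kf is STOP:
            kf_out.put(STOP)  # propagate shutdown downstream
            return
        log.append(kf)        # placeholder for culling, triangulation, local BA
        kf_out.put(kf)


def loop_closing(kf_in, log):
    """Worker: consume keyframes that LocalMapping has finished processing."""
    while True:
        kf = kf_in.get()
        if kf is STOP:
            return
        log.append(kf)        # placeholder for loop detection and fusion


def run_system(frames, is_keyframe):
    to_mapping, to_loop = queue.Queue(), queue.Queue()
    mapped, checked = [], []
    workers = [
        threading.Thread(target=local_mapping, args=(to_mapping, to_loop, mapped)),
        threading.Thread(target=loop_closing, args=(to_loop, checked)),
    ]
    for worker in workers:
        worker.start()
    tracked = []
    for frame in frames:          # Tracking runs in the main thread
        tracked.append(frame)     # placeholder for pose estimation
        if is_keyframe(frame):
            to_mapping.put(frame)
    to_mapping.put(STOP)
    for worker in workers:
        worker.join()
    return tracked, mapped, checked


tracked, mapped, checked = run_system(range(10), lambda f: f % 3 == 0)
print(mapped, checked)
```

Using one FIFO queue per stage keeps the keyframe order intact between threads, which is why both worker logs end up in insertion order.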

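[ORB-SLAM2 Paper & Code Study: Tracking Thread](https://www.cnblogs.com/MingruiYu/p/12352960.html)

ORB feature extraction

ORB features are rotation invariant but not scale invariant. To reduce the effect of scale changes on ORB features, ORB-SLAM uses a scale pyramid: the image is enlarged or shrunk to produce different scales (8 in total, with a scale factor of 1.2 between adjacent levels), and ORB features are then extracted from the image at every scale (each extracted feature carries a tag recording the scale level it came from). The features from all scales are pooled together to form the ORB features of that image.

ORB feature extraction has an inherent problem: the features are unevenly distributed over the image, with some regions crowded with keypoints and others having none. To make the distribution as even as possible, ORB-SLAM divides the image at each scale into a grid of small cells (like slicing a cake) and extracts at least 5 keypoints in each cell. If 5 keypoints cannot be extracted from a cell, the extraction threshold is lowered.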
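The two ideas above, the 8-level pyramid with a 1.2 scale factor and the per-cell threshold fallback, can be sketched in a few lines of Python. This is a minimal sketch under assumptions: it only shrinks the image at each level, the function names are hypothetical, and the threshold values 20 and 7 are illustrative rather than taken from the text.

```python
def pyramid_levels(width, height, n_levels=8, scale_factor=1.2):
    """Return (level, scale, width, height) for every pyramid level.

    Assumption: level 0 is the original image and each further level is
    downscaled by another factor of `scale_factor`.
    """
    levels = []
    for level in range(n_levels):
        scale = scale_factor ** level
        levels.append((level, scale, round(width / scale), round(height / scale)))
    return levels


def detect_in_cell(responses, threshold, min_threshold, min_points=5):
    """Pick corner responses in one grid cell.

    If fewer than `min_points` corners pass `threshold`, retry the same
    cell with the lower `min_threshold`.
    """
    picked = [r for r in responses if r >= threshold]
    if len(picked) < min_points:
        picked = [r for r in responses if r >= min_threshold]
    return picked


levels = pyramid_levels(640, 480)
for level, scale, w, h in levels:
    print(f"level {level}: scale {scale:.3f}, size {w}x{h}")

# A cell with only two strong corners falls back to the lower threshold.
cell = [30, 25, 12, 9, 8, 3]
print(detect_in_cell(cell, threshold=20, min_threshold=7))
```

Each keypoint found at a given level would then be tagged with that level index, which is the scale tag mentioned above.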

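Deciding whether to make the current frame a KeyFrame

- More than 20 frames have passed since the last relocalization

- The LocalMapping thread is idle; however, if no KF has been inserted for 20 consecutive frames, a KF is inserted whether or not LocalMapping is busy

- The current frame tracks at least 50 points (the frame quality must not be too poor)

- No more than 90% of the points tracked in the current frame may be shared with its Reference KF (the frame must not be too redundant)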
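The four criteria above can be written as a single predicate. The sketch below assumes that all four must hold at once, and the `TrackingState` fields and the name `need_new_keyframe` are hypothetical names chosen for illustration, not the ORB-SLAM2 API.

```python
from dataclasses import dataclass, replace


@dataclass
class TrackingState:
    frames_since_relocalization: int
    frames_since_last_keyframe: int
    local_mapping_idle: bool
    tracked_points: int              # points tracked in the current frame
    points_shared_with_ref_kf: int   # of those, how many the Reference KF also sees


def need_new_keyframe(s: TrackingState) -> bool:
    # 1. More than 20 frames since the last relocalization.
    far_from_relocalization = s.frames_since_relocalization > 20
    # 2. LocalMapping is idle, or 20 frames have gone by without a new KF.
    mapping_ready = s.local_mapping_idle or s.frames_since_last_keyframe >= 20
    # 3. The frame tracks at least 50 points.
    good_quality = s.tracked_points >= 50
    # 4. At most 90% of the tracked points are shared with the Reference KF.
    not_redundant = s.points_shared_with_ref_kf <= 0.9 * s.tracked_points
    return far_from_relocalization and mapping_ready and good_quality and not_redundant


state = TrackingState(
    frames_since_relocalization=30,
    frames_since_last_keyframe=5,
    local_mapping_idle=True,
    tracked_points=120,
    points_shared_with_ref_kf=60,
)
print(need_new_keyframe(state))                                         # True
print(need_new_keyframe(replace(state, points_shared_with_ref_kf=115)))  # False
```

Keeping each criterion in its own named boolean makes it easy to log which condition blocked a keyframe insertion.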

---

# Resources

## [yepeichu123/orbslam2_learn](https://github.com/yepeichu123/orbslam2_learn)

A study project for ORB-SLAM2 that splits the source code into parts by function, so that learners can build each part easily. It accompanies the author's blog: https://www.cnblogs.com/yepeichu/category/1356379.html.

# References

- [CSDN - ORB-SLAM2 Paper Interpretation and Summary](https://blog.csdn.net/zxcqlf/article/details/80198298)

- [ORB-SLAM2 Paper & Code Study: Overview](https://www.cnblogs.com/MingruiYu/p/12347171.html)

- [ORB-SLAM2 Paper: Full Translation](https://www.cnblogs.com/MingruiYu/p/12991119.html)

- [A Simple Stereo SLAM System with a Deep-Learning Loop Closure Detection Module](https://www.cnblogs.com/MingruiYu/p/12634631.html)

- [rpng/calc Convolutional Autoencoder for Loop Closure](https://github.com/rpng/calc)

- [Mingrui-Yu/A-Simple-Stereo-SLAM-System-with-Deep-Loop-Closing](https://github.com/Mingrui-Yu/A-Simple-Stereo-SLAM-System-with-Deep-Loop-Closing)
