## RGDB AR Demo

- Source code:

```cpp

#include

## RGDB AR Demo

- Source code:

```cpp

#include ORB-SLAM3 AR Demo

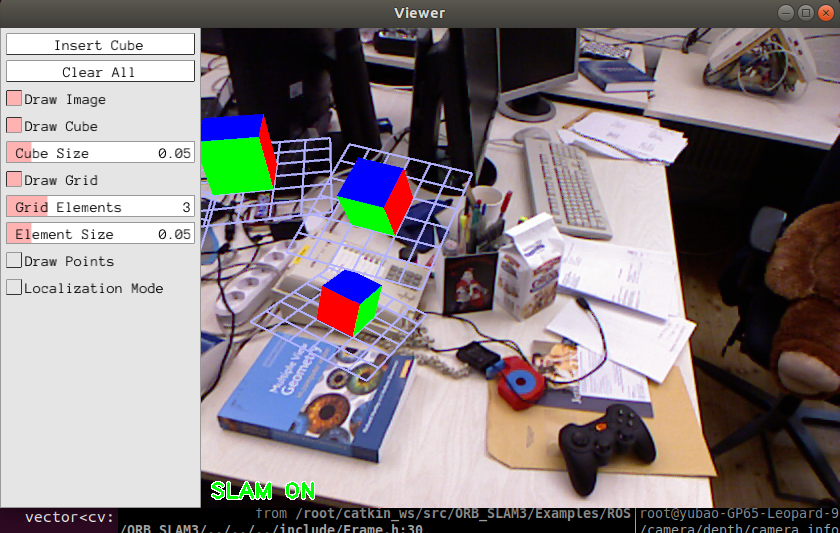

# ORB-SLAM3 AR Demo

## Monocular

- Compile ORB-SLAM3 and make sure it can run in ROS

```sh

cd [PROJECT]

catkin_make

```

- Run ORB-SLAM3 MonoAR node

```sh

$rosrun orb_slam3 MonoAR Vocabulary/ORBvoc.tx Examples/ROS/ORB_SLAM3/config/ros_tum.yaml /camera/image_raw:=/camera/rgb/image_color

```

- Play dataset

For example, TUM1:

```sh

rosbag play --clock rgbd_dataset_freiburg1_room.bag --hz=1

```

- Insert virtual 3D object

## RGDB AR Demo

- Source code:

```cpp

#include

## RGDB AR Demo

- Source code:

```cpp

#include

#include

#include

#include

#include <cv_bridge/cv_bridge.h>

#include <message_filters/subscriber.h>

#include <message_filters/sync_policies/approximate_time.h>

#include <message_filters/time_synchronizer.h>

#include <ros/ros.h>

#include <opencv2/core/core.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include "../../../include/System.h"

#include "ViewerAR.h"

using namespace std;

ORB_SLAM3::ViewerAR viewerAR;

bool bRGB = true;

cv::Mat K;

cv::Mat DistCoef;

class ImageGrabber {

public:

ImageGrabber(ORB_SLAM3::System* pSLAM)

: mpSLAM(pSLAM)

{

}

void GrabRGBD(const sensor_msgs::ImageConstPtr& msgRGB, const sensor_msgs::ImageConstPtr& msgD);

ORB_SLAM3::System* mpSLAM;

};

int main(int argc, char** argv)

{

ros::init(argc, argv, "Mono");

ros::start();

if (argc != 3) {

cerr << endl

<< "Usage: rosrun ORB_SLAM3 RGBD path_to_vocabulary path_to_settings" << endl;

ros::shutdown();

return 1;

}

// Create SLAM system. It initializes all system threads and gets ready to process frames.

ORB_SLAM3::System SLAM(argv[1], argv[2], ORB_SLAM3::System::RGBD, false);

cout << endl

<< endl;

cout << "-----------------------" << endl;

cout << "Augmented Reality Demo" << endl;

cout << "1) Translate the camera to initialize SLAM." << endl;

cout << "2) Look at a planar region and translate the camera." << endl;

cout << "3) Press Insert Cube to place a virtual cube in the plane. " << endl;

cout << endl;

cout << "You can place several cubes in different planes." << endl;

cout << "-----------------------" << endl;

cout << endl;

viewerAR.SetSLAM(&SLAM);

ImageGrabber igb(&SLAM);

ros::NodeHandle nh;

// ros::Subscriber sub = nh.subscribe("/camera/image_raw", 1, &ImageGrabber::GrabRGBD, &igb);

message_filters::Subscriber rgb_sub(nh, "/camera/rgb/image_color", 1);

message_filters::Subscriber depth_sub(nh, "/camera/depth/image", 1);

typedef message_filters::sync_policies::ApproximateTime sync_pol;

message_filters::Synchronizer sync(sync_pol(10), rgb_sub, depth_sub);

sync.registerCallback(boost::bind(&ImageGrabber::GrabRGBD, &igb, _1, _2));

cv::FileStorage fSettings(argv[2], cv::FileStorage::READ);

bRGB = static_cast((int)fSettings["Camera.RGB"]);

float fps = fSettings["Camera.fps"];

viewerAR.SetFPS(fps);

float fx = fSettings["Camera.fx"];

float fy = fSettings["Camera.fy"];

float cx = fSettings["Camera.cx"];

float cy = fSettings["Camera.cy"];

viewerAR.SetCameraCalibration(fx, fy, cx, cy);

K = cv::Mat::eye(3, 3, CV_32F);

K.at(0, 0) = fx;

K.at(1, 1) = fy;

K.at(0, 2) = cx;

K.at(1, 2) = cy;

DistCoef = cv::Mat::zeros(4, 1, CV_32F);

DistCoef.at(0) = fSettings["Camera.k1"];

DistCoef.at(1) = fSettings["Camera.k2"];

DistCoef.at(2) = fSettings["Camera.p1"];

DistCoef.at(3) = fSettings["Camera.p2"];

const float k3 = fSettings["Camera.k3"];

if (k3 != 0) {

DistCoef.resize(5);

DistCoef.at(4) = k3;

}

thread tViewer = thread(&ORB_SLAM3::ViewerAR::Run, &viewerAR);

ros::spin();

// Stop all threads

SLAM.Shutdown();

// Save camera trajectory

SLAM.SaveKeyFrameTrajectoryTUM("KeyFrameTrajectory.txt");

ros::shutdown();

return 0;

}

void ImageGrabber::GrabRGBD(const sensor_msgs::ImageConstPtr& msgRGB, const sensor_msgs::ImageConstPtr& msgD)

{

// Copy the ros image message to cv::Mat.

cv_bridge::CvImageConstPtr cv_ptrRGB;

try {

cv_ptrRGB = cv_bridge::toCvShare(msgRGB);

} catch (cv_bridge::Exception& e) {

ROS_ERROR("cv_bridge exception: %s", e.what());

return;

}

cv_bridge::CvImageConstPtr cv_ptrD;

try {

cv_ptrD = cv_bridge::toCvShare(msgD);

} catch (cv_bridge::Exception& e) {

ROS_ERROR("cv_bridge exception: %s", e.what());

return;

}

cv::Mat im = cv_ptrRGB->image.clone();

cv::Mat imu;

cv::Mat Tcw = mpSLAM->TrackRGBD(cv_ptrRGB->image, cv_ptrD->image, cv_ptrRGB->header.stamp.toSec());

int state = mpSLAM->GetTrackingState();

vector vMPs = mpSLAM->GetTrackedMapPoints();

vector vKeys = mpSLAM->GetTrackedKeyPointsUn();

cv::undistort(im, imu, K, DistCoef);

if (bRGB)

viewerAR.SetImagePose(imu, Tcw, state, vKeys, vMPs);

else {

cv::cvtColor(imu, imu, CV_RGB2BGR);

viewerAR.SetImagePose(imu, Tcw, state, vKeys, vMPs);

}

}

```

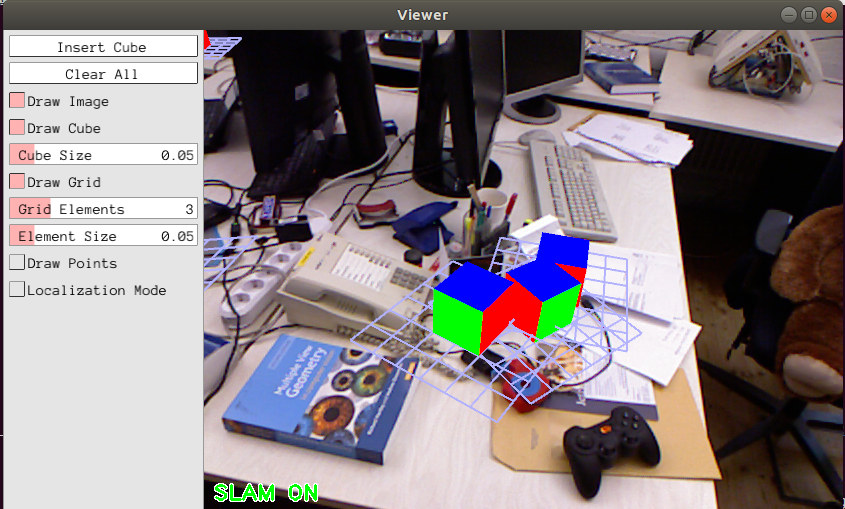

- RGBD AR Demo

## USB camera

```sh

Augmented Reality Demo

1) Translate the camera to initialize SLAM.

2) Look at a planar region and translate the camera.

3) Press Insert Cube to place a virtual cube in the plane.

```

## RGDB AR Demo

- Source code:

```cpp

#include

## RGDB AR Demo

- Source code:

```cpp

#include

No comments